Single-threaded Event Loop Architecture for Building Asynchronous, Non-Blocking, Highly Concurrent Real-time Services

Real-time services such as chat apps, MMO (Massively Multiplayer Online) games, financial trading systems and apps with live-streaming features deal with heavy concurrent traffic and real-time data.

These services are IO-bound: they spend a major chunk of their resources on input-output operations. Examples include high-throughput, low-latency network communication (between clients and servers, and among application components), real-time database writes, file IO, calls to third-party APIs and live data streaming.

For reference, IO-bound operations depend on the server’s input-output system. The faster it is, the faster the operations execute. Any delay in an IO operation, like writing data to disk, can become a system bottleneck. In an IO-bound system, the CPU is relatively underused and often sits idle, waiting for IO operations to complete before it can continue processing.

In a CPU-bound system, performance depends on the CPU’s speed. The system spends most of its time executing instructions on the CPU(s) rather than communicating with external components. The more efficient the CPU, the better the system performs.
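A minimal Python asyncio sketch can make the IO-bound versus CPU-bound distinction concrete. It runs five simulated IO waits (`asyncio.sleep`) and five simulated CPU-bound busy loops on a single-threaded event loop. The task names and the 50 ms duration are arbitrary choices for illustration, and actual timings will vary by machine.

```python
import asyncio
import time

DURATION = 0.05  # seconds of simulated work per task (arbitrary)

async def io_task() -> None:
    # Simulated IO wait: the task yields to the loop while "waiting".
    await asyncio.sleep(DURATION)

async def cpu_task() -> None:
    # Simulated CPU work: a busy loop that never yields to the loop.
    end = time.perf_counter() + DURATION
    while time.perf_counter() < end:
        pass

async def run_five(task) -> float:
    # Start five copies of the task concurrently and time the whole batch.
    start = time.perf_counter()
    await asyncio.gather(*(task() for _ in range(5)))
    return time.perf_counter() - start

io_elapsed = asyncio.run(run_five(io_task))
cpu_elapsed = asyncio.run(run_five(cpu_task))
print(f"5 IO-bound tasks:  {io_elapsed:.2f}s")   # waits overlap (about one DURATION)
print(f"5 CPU-bound tasks: {cpu_elapsed:.2f}s")  # back to back (about five DURATIONs)
```

The IO waits overlap because each task hands control back to the loop while it waits. The busy loops never yield, so the single thread has to run them one after another.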

In a memory-bound system, performance depends on how much memory (RAM) is available to hold the data required for computation and on how fast memory can be accessed.

Memory-bound systems have high memory (RAM) usage. They may load extensive data sets into memory, which requires significant RAM. In these systems, slow or limited memory can become a bottleneck.

Cloud providers offer memory-optimized, compute-optimized and IO-optimized instances so that each workload can run on hardware that matches its profile.

Related read: How modern cloud servers leverage different system architectures to optimize parallel compute

The key processes in a real-time concurrent application discussed above, such as high-throughput network operations, database writes and inter-component communication, introduce latency into the system because they involve IO.

To keep latency low, web technologies and frameworks across ecosystems use different strategies to implement scalable real-time services. These include non-blocking IO, asynchronous event processing with single-threaded architectures, the actor model and reactive programming.

In this article, I’ll discuss the event-driven single-threaded architecture that technologies such as NodeJS and Redis use to handle a large number of IO-bound operations.

With that being said, let’s get started.

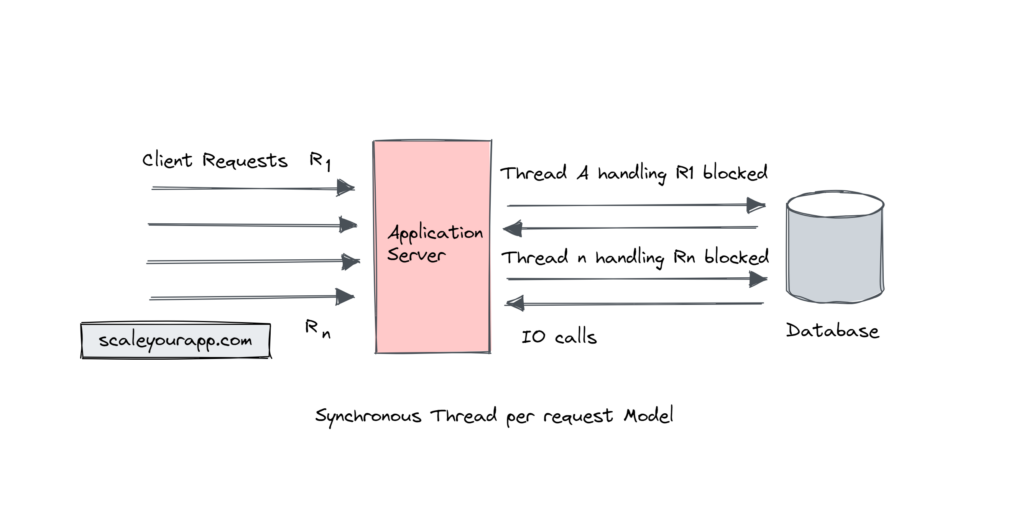

Before delving into the single-threaded architecture, let’s look at the issues with the conventional thread-per-request model. These issues created the need for other strategies to handle IO-bound operations efficiently.

The IO Bottleneck With Thread-Based Synchronous Model

With the conventional thread-based synchronous model that the application servers leverage to handle client requests, large-scale IO-bound services face a request throughput bottleneck.

Take the Apache Tomcat server as an example. When it receives a client request, it assigns the request to a worker thread from the thread pool it maintains for processing requests.

In an IO-bound application, most requests perform IO operations, such as sending a query to the database. While a request waits for the database’s response, its worker thread is blocked and cannot process other requests made to the server.

Every such request temporarily blocks a worker thread while it waits for the database. The worker thread returns to the pool only after the database responds and the server sends the response back to the client. When every worker thread in the pool is blocked this way, new requests have to wait for a free thread even though the CPU is largely idle.
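The blocking behavior can be sketched in Python with a fixed-size `ThreadPoolExecutor` standing in for a server’s worker pool. This is an illustration under stated assumptions, not Tomcat’s implementation. The pool size, the request count and the `time.sleep` call used as a stand-in for a database query are all hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

POOL_SIZE = 2      # hypothetical worker pool size, kept tiny for illustration
REQUESTS = 6
QUERY_TIME = 0.1   # simulated database round trip, in seconds

lock = threading.Lock()
in_flight = 0
max_in_flight = 0

def handle_request(request_id: int) -> str:
    global in_flight, max_in_flight
    with lock:
        in_flight += 1
        max_in_flight = max(max_in_flight, in_flight)
    # Blocking "query": this worker thread can do nothing else until it returns.
    time.sleep(QUERY_TIME)
    with lock:
        in_flight -= 1
    return f"response {request_id}"

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=POOL_SIZE) as pool:
    responses = list(pool.map(handle_request, range(REQUESTS)))
elapsed = time.perf_counter() - start

# At most POOL_SIZE requests are in progress at once, so total time is at least
# (REQUESTS / POOL_SIZE) * QUERY_TIME, even though the CPU is mostly idle.
print(responses, f"{elapsed:.2f}s", "max concurrent:", max_in_flight)
```

Each worker spends almost all its time sleeping, yet queued requests still wait for a free thread. In this model, serving more requests at once means adding more threads.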

Though modern application servers are designed to handle concurrent traffic efficiently, in the case of high concurrent traffic with IO-bound processes, this thread blocking behavior will lead to resource contention, reduced concurrency and performance bottlenecks.

To deal with this issue, highly concurrent IO-bound applications are typically built to be non-blocking.

Tackling the IO Bottleneck Issue

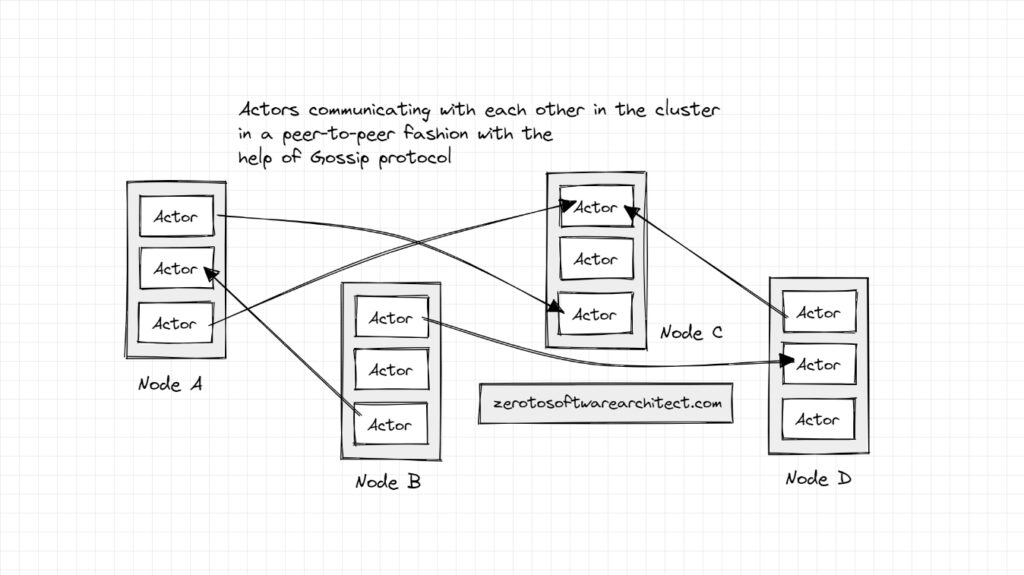

Different programming languages, and the technologies in their ecosystems, adopt several asynchronous approaches to avoid blocking on synchronous requests: the single-threaded event loop model, the actor model and reactive programming.

Single-threaded Architecture

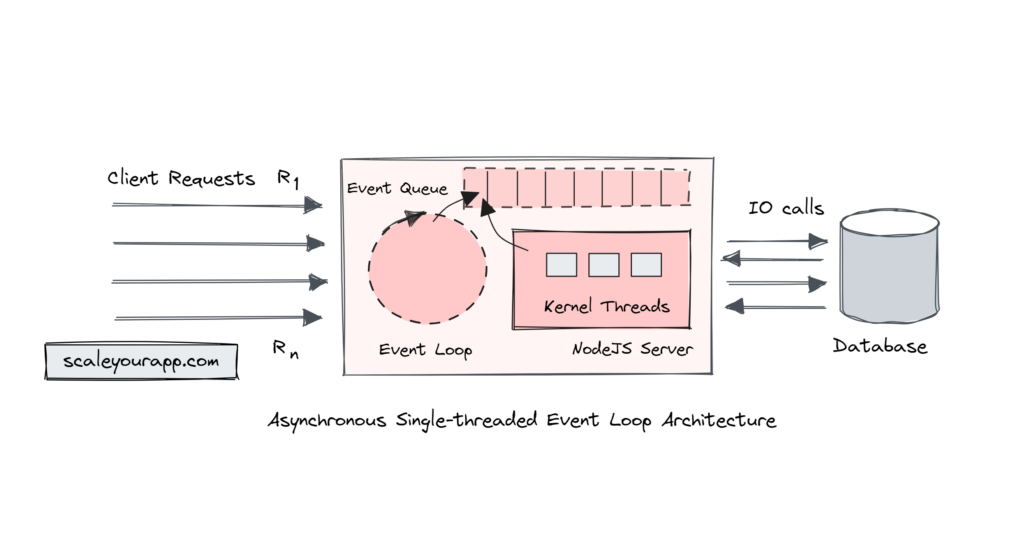

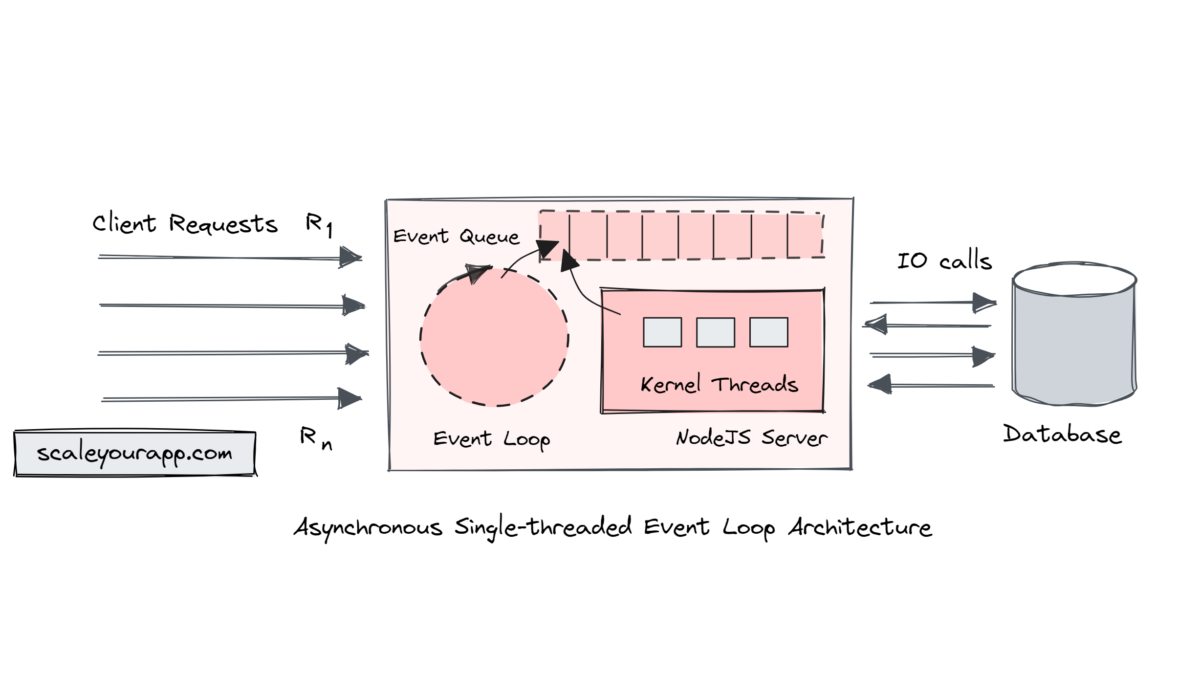

NodeJS Single-threaded Event Loop Architecture

NodeJS is designed from the ground up to handle a large number of concurrent requests and asynchronous IO operations with minimal overhead, thanks to its single-threaded event loop architecture. The event loop runs on the main (and only) JavaScript thread. It handles all client requests and delegates IO operations to the underlying OS instead of waiting on them.

Because IO tasks are delegated, the event loop can move on to other client requests without being blocked. NodeJS keeps pending events and their callbacks in an event queue. The main thread works through this queue on every iteration of the loop.

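For instance, when a client sends an IO-bound request to the NodeJS server, an event is triggered. The event loop then delegates the IO operation and registers a callback to run when it completes. Under the hood, NodeJS relies on the libuv library. Network IO goes through the OS’s non-blocking notification mechanisms: epoll on Linux, kqueue on macOS and BSD, and IOCP on Windows. Operations the OS cannot perform asynchronously in a portable way, such as most file system calls and DNS lookups via getaddrinfo, run on a small thread pool that libuv maintains.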

Once the IO operation completes (for instance, when the database returns its response), the Node runtime triggers an event indicating that the query processing is complete. The event loop then executes the registered callback.

The callback is a function that runs when the IO operation completes. Here, it constructs a response with the information fetched from the database and sends it back to the client.

The event loop has several phases (timers, poll, check and so on). Within each phase, queued callbacks are processed in the order they were added.
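The mechanics of an event queue can be sketched with a toy loop in Python. `ToyEventLoop`, `call_soon` and `start_io` are hypothetical names for this illustration. A real loop such as libuv or asyncio waits on OS readiness notifications rather than on timers, and libuv splits its work into several phases.

```python
import heapq
import itertools
import time
from collections import deque
from typing import Callable

class ToyEventLoop:
    """A deliberately simplified single-threaded event loop (not libuv's design)."""

    def __init__(self) -> None:
        self.ready = deque()        # the "event queue": callbacks ready to run, FIFO
        self.pending = []           # heap of (completion time, sequence no., callback)
        self._seq = itertools.count()

    def call_soon(self, callback: Callable[[], None]) -> None:
        self.ready.append(callback)

    def start_io(self, duration: float, callback: Callable[[], None]) -> None:
        # Stand-in for handing an IO operation off: we only record when it will
        # "complete"; nothing here blocks the loop.
        heapq.heappush(self.pending, (time.monotonic() + duration, next(self._seq), callback))

    def run(self) -> None:
        while self.ready or self.pending:
            # 1. Move completed "IO operations" onto the event queue.
            now = time.monotonic()
            while self.pending and self.pending[0][0] <= now:
                _, _, callback = heapq.heappop(self.pending)
                self.ready.append(callback)
            # 2. Run the callbacks queued so far, in the order they were added.
            for _ in range(len(self.ready)):
                self.ready.popleft()()
            # 3. Idle: sleep until the next completion. A real loop would block
            #    in epoll/kqueue here instead.
            if not self.ready and self.pending:
                time.sleep(max(0.0, self.pending[0][0] - time.monotonic()))

log = []
loop = ToyEventLoop()
loop.start_io(0.05, lambda: log.append("db query done"))     # slower "IO"
loop.start_io(0.01, lambda: log.append("cache read done"))   # faster "IO"
loop.call_soon(lambda: log.append("request A accepted"))
loop.call_soon(lambda: log.append("request B accepted"))
loop.run()
print(log)
# ['request A accepted', 'request B accepted', 'cache read done', 'db query done']
```

The single thread never waits on an individual operation. It only runs callbacks whose events are ready, and it sleeps when there is nothing else to do.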

Unlike Tomcat, NodeJS does not tie up a thread for every client request. It relies instead on the non-blocking IO facilities of the underlying OS. This reduces thread creation, management and context-switching overhead, making the request-response flow more resource-efficient.

IO parallelism happens at the OS level (and in libuv’s thread pool), while the JavaScript code runs on a single thread. This non-blocking behavior makes NodeJS suitable for highly concurrent IO-bound services like MMO games and chat apps.
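In Python, `asyncio` follows the same single-threaded model. The sketch below uses a hypothetical `query_database` coroutine that simulates a non-blocking database driver with `asyncio.sleep`. With it, the loop serves 1,000 concurrent requests on one thread.

```python
import asyncio
import threading
import time

async def query_database(user_id: int) -> dict:
    # Hypothetical stand-in for a non-blocking database driver call.
    await asyncio.sleep(0.1)
    return {"id": user_id, "name": f"user-{user_id}"}

async def handle_request(user_id: int) -> tuple:
    # While this request awaits the "database", the loop serves other requests.
    row = await query_database(user_id)
    return f"Hello, {row['name']}", threading.get_ident()

async def main() -> list:
    # 1,000 concurrent requests, all handled by one event loop on one thread.
    return await asyncio.gather(*(handle_request(i) for i in range(1000)))

start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
thread_ids = {tid for _, tid in results}
print(len(results), "responses from", len(thread_ids), "thread")  # 1000 responses from 1 thread
print(f"elapsed: {elapsed:.2f}s")  # close to one 0.1 s wait, not 1,000 of them
```

Each request yields at `await`, so the total time should stay close to a single simulated query rather than the sum of all of them. No extra threads are created along the way.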

Tomcat, or any other server with a blocking, synchronous thread-per-request model, is better suited to CPU-bound tasks. Each request gets its own thread, and requests can run on multiple CPU cores in parallel.

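For CPU-bound tasks, NodeJS offers the worker_threads module. It runs JavaScript on additional threads, so the work can use multiple CPU cores without blocking the event loop. This is separate from libuv’s internal thread pool, which has four threads by default (configurable through the UV_THREADPOOL_SIZE environment variable). That pool runs file system operations, DNS lookups and some CPU-intensive built-in tasks, such as parts of the crypto and zlib modules. Network IO uses neither pool.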
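The effect of offloading blocking work can be sketched with Python’s asyncio. Its default executor is a thread pool and plays a role loosely similar to libuv’s pool. In this hypothetical setup, `blocking_read` simulates a call with no non-blocking interface, and a heartbeat task measures whether the loop stays responsive. For CPU-heavy pure-Python code, the GIL means a process pool is usually the better choice. The thread pool here suits blocking calls.

```python
import asyncio
import time

def blocking_read() -> str:
    # Hypothetical stand-in for a call with no non-blocking OS interface.
    time.sleep(0.2)
    return "file contents"

async def heartbeat(ticks: list, stop: asyncio.Event) -> None:
    # Records a tick roughly every 10 ms whenever the loop is free to run it.
    while not stop.is_set():
        ticks.append(time.perf_counter())
        await asyncio.sleep(0.01)

async def measure(offload: bool) -> int:
    ticks: list = []
    stop = asyncio.Event()
    hb = asyncio.create_task(heartbeat(ticks, stop))
    await asyncio.sleep(0)  # let the heartbeat start
    before = len(ticks)
    if offload:
        # Run the blocking call on the loop's default thread pool executor.
        await asyncio.get_running_loop().run_in_executor(None, blocking_read)
    else:
        # Run the blocking call directly on the event loop thread.
        blocking_read()
    during = len(ticks) - before
    stop.set()
    await hb
    return during

inline_ticks = asyncio.run(measure(offload=False))
offloaded_ticks = asyncio.run(measure(offload=True))
print("ticks while blocking inline:", inline_ticks)  # 0: the loop was stalled
print("ticks while offloaded:", offloaded_ticks)     # many: the loop kept running
```

Calling the blocking function inline freezes every other task on the loop. Handing it to a pool thread leaves the single event loop thread free to keep serving.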

Redis Single-threaded Architecture

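Like NodeJS, Redis uses a single-threaded event loop to handle a large number of concurrent requests. It does not create a dedicated thread per client. Instead, it uses the OS’s IO multiplexing facilities (such as epoll on Linux or kqueue on macOS and BSD) to watch many client sockets from one thread, and it executes commands one at a time. Since Redis 6, optional IO threads can take over socket reads and writes, but command execution remains single-threaded.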

The event loop, running on that single thread, processes the events, which keeps Redis highly responsive and non-blocking. If you wish to go into the details, check out this resource.
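The core of this design, one thread multiplexing many client connections, can be sketched with Python’s `selectors` module, which uses epoll or kqueue where available. This is a toy key-value server, not Redis. The plain-text `SET`/`GET` commands are an invented stand-in for Redis’s RESP protocol, and socket pairs replace real TCP clients so the example runs on its own.

```python
import selectors
import socket

store = {}                          # the in-memory key-value data
sel = selectors.DefaultSelector()   # epoll on Linux, kqueue on macOS/BSD

def handle(conn: socket.socket) -> None:
    # Called only when conn is readable, so recv() returns without waiting.
    data = conn.recv(1024)
    if not data:                    # peer closed the connection
        sel.unregister(conn)
        conn.close()
        return
    parts = data.decode().split()
    cmd = parts[0].upper() if parts else ""
    if cmd == "SET" and len(parts) == 3:
        store[parts[1]] = parts[2]
        reply = "OK"
    elif cmd == "GET" and len(parts) == 2:
        reply = store.get(parts[1], "(nil)")
    else:
        reply = "ERR unknown command"
    conn.sendall((reply + "\n").encode())

def serve_until_idle(timeout: float = 0.1) -> None:
    # The event loop: one thread waits on all sockets at once and runs
    # each ready socket's handler, one command at a time.
    while sel.get_map():
        events = sel.select(timeout)
        if not events:
            break
        for key, _ in events:
            key.data(key.fileobj)

# Simulate three clients with socket pairs instead of real TCP connections.
servers, clients = [], []
for _ in range(3):
    server_end, client_end = socket.socketpair()
    server_end.setblocking(False)
    sel.register(server_end, selectors.EVENT_READ, data=handle)
    servers.append(server_end)
    clients.append(client_end)

clients[0].sendall(b"SET greeting hello")
clients[1].sendall(b"SET visits 42")
serve_until_idle()
clients[2].sendall(b"GET greeting")
serve_until_idle()

replies = [c.recv(1024).decode().strip() for c in clients]
print(replies)  # ['OK', 'OK', 'hello']

for s in servers:
    sel.unregister(s)
    s.close()
for c in clients:
    c.close()
sel.close()
```

Because a single thread executes every command, `store` needs no locks. Each command runs to completion before the next one starts.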

Single-Threaded Event Loop Architecture, Actor Model, Reactive Programming

As mentioned above, single-threaded event loop architecture, actor model and reactive programming are three different programming paradigms intended to handle concurrency in web-scale services.

The actor model is designed to be non-blocking and message-driven, with high throughput in mind. The core processing unit in an actor model is an actor, which communicates with other actors in the system by exchanging messages. You’ll find a detailed discussion of the actor model in the following posts on this blog:

Understanding the Actor model to build non-blocking, high-throughput distributed systems

How Actor model/Actors run in clusters facilitating asynchronous communication in distributed systems
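As a rough illustration of the idea, here is a minimal actor in Python asyncio. It has private state, a mailbox (an `asyncio.Queue`) and a single task that handles one message at a time. `CounterActor` and its message names are hypothetical. Frameworks such as Akka, or Erlang/OTP, add supervision, addressing and distribution on top of this basic shape.

```python
import asyncio

class CounterActor:
    """A minimal actor: private state plus a mailbox processed one message at a time."""

    def __init__(self) -> None:
        self._count = 0                 # only the actor's own task touches this
        self.mailbox = asyncio.Queue()  # messages are (kind, reply_future) tuples

    async def run(self) -> None:
        while True:
            kind, reply_to = await self.mailbox.get()
            if kind == "increment":
                self._count += 1
            elif kind == "get":
                reply_to.set_result(self._count)
            elif kind == "stop":
                return

async def sender(actor: CounterActor, n: int) -> None:
    # Senders never touch the actor's state; they only post messages.
    for _ in range(n):
        await actor.mailbox.put(("increment", None))

async def main() -> int:
    actor = CounterActor()
    worker = asyncio.create_task(actor.run())
    # Four concurrent senders post 25 messages each.
    await asyncio.gather(*(sender(actor, 25) for _ in range(4)))
    reply = asyncio.get_running_loop().create_future()
    await actor.mailbox.put(("get", reply))
    total = await reply
    await actor.mailbox.put(("stop", None))
    await worker
    return total

total = asyncio.run(main())
print(total)  # 100
```

No locks are needed: messages are queued and handled one at a time by the only code that can modify `_count`.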

I’ve discussed the single-threaded event loop architecture and the actor model thus far on this blog. Reactive programming focuses on reacting to asynchronous events and data streams. I’ll be discussing it in my upcoming blog posts. You can subscribe to my newsletter if you wish to see the content slide into your inbox as soon as it is published.

Meanwhile, you can read how PayPal processes billions of messages per day with reactive streams.

Technologies for Building IO-bound Concurrent Non-blocking Applications

Some programming languages are designed from the ground up to handle IO-bound processes efficiently, while others rely on frameworks to implement non-blocking services. Picking the right technology depends on many factors. These include the development team’s experience with it, the learning curve, the use case, community and ecosystem support, performance needs and licensing costs.

Here are some programming languages and the associated frameworks that are leveraged to write highly concurrent real-time asynchronous event-driven non-blocking applications.

NodeJS

NodeJS, as discussed above, is designed from the ground up for highly concurrent applications. NestJS and Express are widely used frameworks for building performant concurrent applications on it.

Java

Java supports non-blocking, event-driven programming via frameworks and libraries like Project Reactor, Akka, Vert.x, RxJava, Quasar and Spring WebFlux.

Python

In the Python ecosystem, frameworks and libraries like asyncio, Tornado, Twisted, Sanic, Quart and FastAPI support non-blocking, IO-bound application development.

Scala

Scala caters to a wide range of use cases, from writing concurrent web applications to asynchronous data processing. It has a rich ecosystem of non-blocking frameworks such as Akka, Play, Finagle, Monix, Cats Effect, ZIO, Lagom, FS2 and more.

Go

Go is a fitting language for building concurrent, non-blocking applications. It is designed from the ground up with concurrency in mind, with language features such as goroutines and channels and tooling that support the implementation of highly concurrent services.

Kotlin

In the Kotlin universe, support for non-blocking applications comes through frameworks and libraries like Vert.x, Ktor, Project Reactor, Kovenant, Arrow Fx, Netty and Spring WebFlux.

Kotlin itself has a language feature called coroutines that is used for writing non-blocking and asynchronous code.

Rust

Rust is also used for building concurrent, scalable web services, though the ecosystem is relatively new compared to other programming languages.

The frameworks and libraries used are Tokio, Actix, Rocket, Warp, Hyper, Async-std, Tide, etc.

C#

In the C# universe, libraries and frameworks leveraged are ASP.NET Core, SignalR, Akka.NET, Rx.NET, Hangfire, Orleans, Grainfather, RestEase, etc.

Erlang

Erlang is widely used for developing non-blocking concurrent web services. It intrinsically supports concurrency through lightweight, resource-efficient processes (called Erlang processes) that behave like actors. The language also has built-in fault tolerance: processes are isolated, so if one Erlang process crashes, it does not affect the stability of the entire system.

Application modules communicate asynchronously and with low latency via message passing, which supports non-blocking operations. Libraries and frameworks in the ecosystem used for building real-time apps include Cowboy, Chicago Boss, Yaws, Nitrogen and MochiWeb.

Check out the Zero to Software Architecture Proficiency learning path, a series of three courses I have written intending to educate you, step by step, on the domain of software architecture and distributed system design. The learning path takes you right from having no knowledge in it to making you a pro in designing large-scale distributed systems like YouTube, Netflix, Hotstar, and more.

Well, folks! This is pretty much it. If you found the content helpful, consider sharing it with your network for more reach. I am Shivang. Here are my X and LinkedIn profiles. You can read about me here.

I’ll see you in the next post. Cheers!

Shivang
