McDonald’s uses events across their distributed architecture for use cases such as asynchronous, transactional and analytical processing, including mobile-order progress tracking and sending marketing offers like deals and promotions to their customers.

Instead of building a separate event-streaming solution for each microservice or group of microservices, they built a unified platform that consumes events from multiple external and internal producers (services). This ensured a consistent implementation and avoided the operational complexity of maintaining several different event-streaming modules.

A few design goals for this platform were scalability (the platform needed to auto-scale in case of increased load), availability, performance (the events needed to be delivered in real-time), security, reliability, simplicity and data integrity.

The event-streaming platform is built on Kafka. Since their system was already running on AWS, they used Amazon Managed Streaming for Apache Kafka (MSK) to implement the module. This avoided the operational overhead of working with a different on-prem or public cloud service.

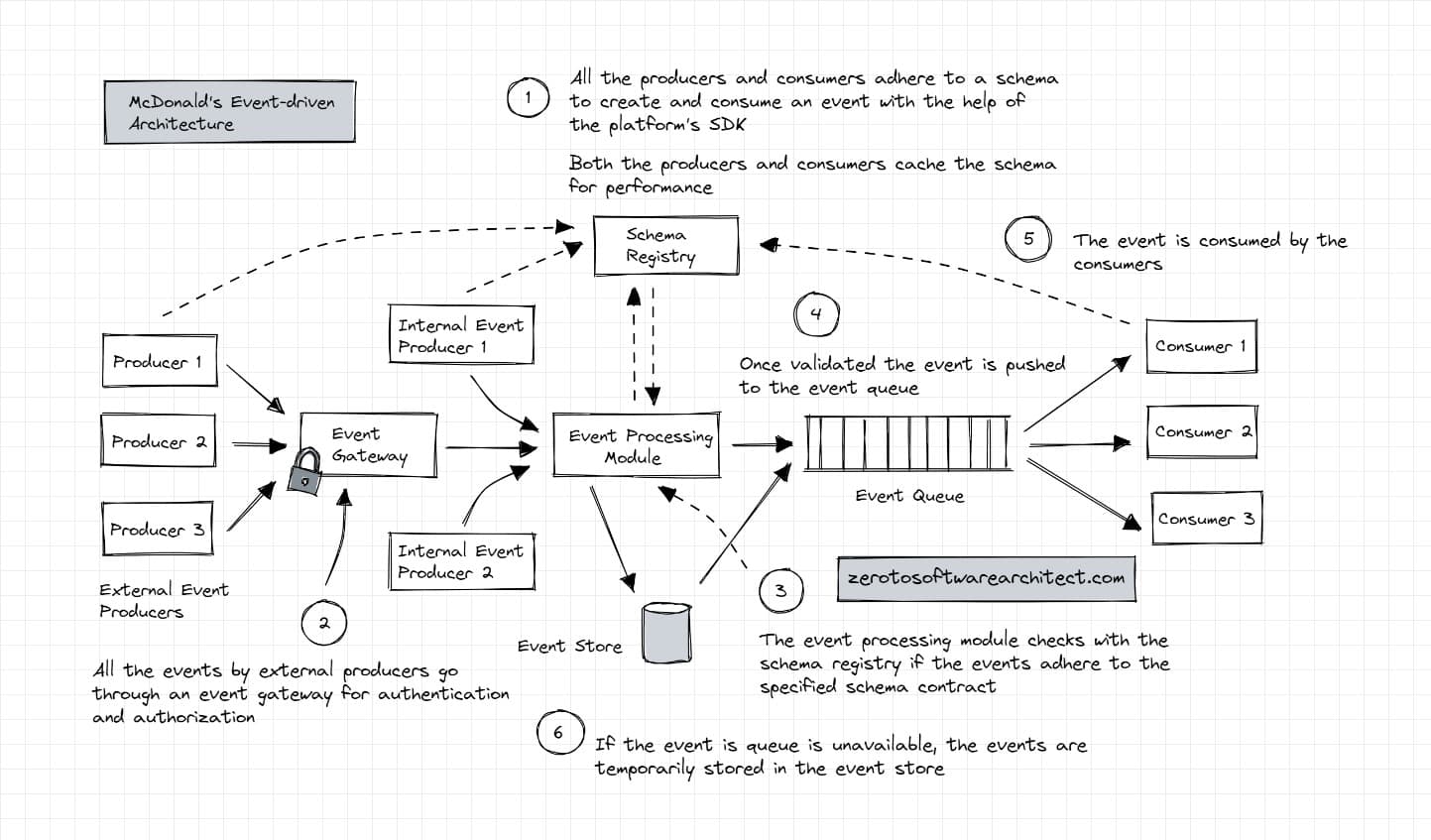

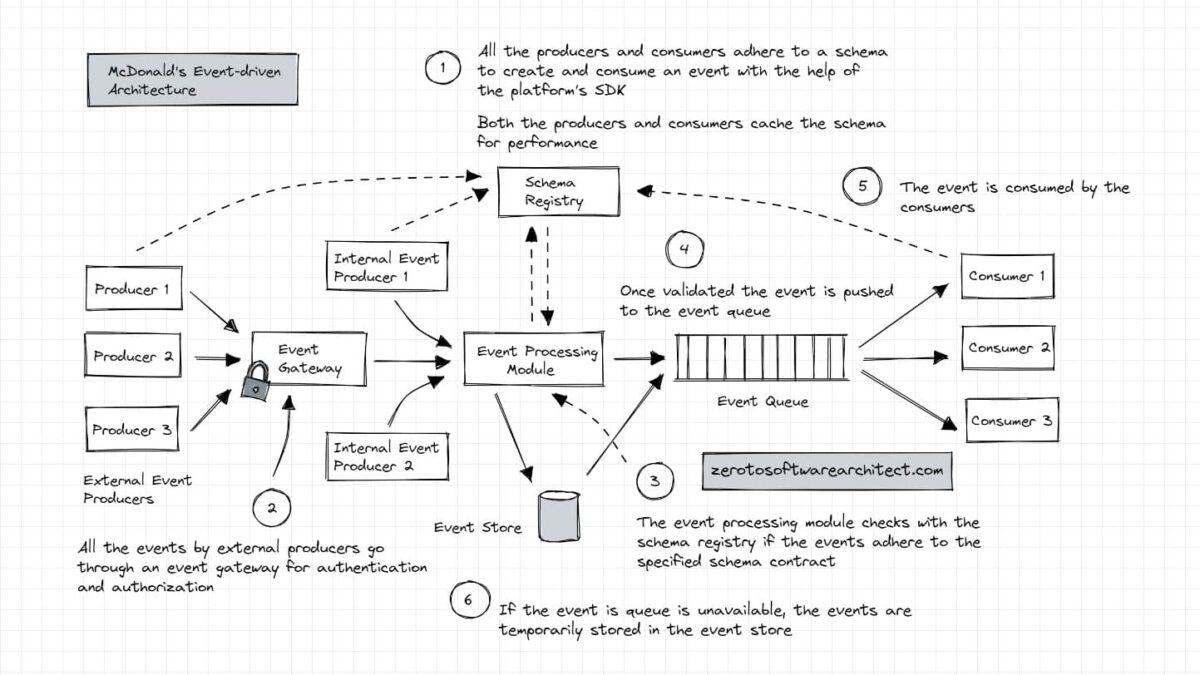

Since the unified platform received events from multiple producers and delivered them to multiple consumers, they achieved data consistency with a schema registry that provided a well-defined contract for creating events. The producers and the consumers adhered to this contract by working with events through the unified event streaming platform SDKs.
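To make the idea of a contract concrete, here is a minimal sketch of how a registry could validate events before they are published. It is an in-memory stand-in written for illustration, not McDonald's SDK or a real schema registry client; the `SchemaRegistry` class, the `order_progress` event type and its fields are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaRegistry:
    """Hypothetical in-memory registry: event type -> {field name: expected type}."""
    schemas: dict = field(default_factory=dict)

    def register(self, event_type: str, required_fields: dict) -> None:
        # Store the contract that producers and consumers agree on.
        self.schemas[event_type] = required_fields

    def validate(self, event_type: str, payload: dict) -> list:
        # Return a list of contract violations; an empty list means the event is valid.
        schema = self.schemas.get(event_type)
        if schema is None:
            return [f"unknown event type: {event_type}"]
        errors = []
        for name, expected in schema.items():
            if name not in payload:
                errors.append(f"missing field: {name}")
            elif not isinstance(payload[name], expected):
                errors.append(f"wrong type for {name}")
        return errors


registry = SchemaRegistry()
registry.register("order_progress", {"order_id": str, "status": str})

# A producer-side check before publishing (field names are made up).
valid_errors = registry.validate("order_progress", {"order_id": "A1", "status": "READY"})
invalid_errors = registry.validate("order_progress", {"order_id": 42})
```

In a setup like this, both the producer SDK and the consumer SDK would call `validate` against the same registered schema, which is what keeps the two sides consistent.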

If the unified platform went down, an event store (Amazon DynamoDB) served as a temporary store for events until the platform came back online.
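The fallback behaviour can be sketched roughly as follows. This is an illustration under assumptions: a plain Python list stands in for the event store, the `send` callable stands in for publishing to the platform, and `FallbackPublisher` and `BrokerUnavailable` are hypothetical names.

```python
class BrokerUnavailable(Exception):
    """Raised by the (hypothetical) send function when the platform is down."""


class FallbackPublisher:
    def __init__(self, send, store):
        self.send = send    # callable that publishes one event to the platform
        self.store = store  # list standing in for the temporary event store

    def publish(self, event):
        # Try the platform first; park the event in the store if it is unreachable.
        try:
            self.send(event)
            return "sent"
        except BrokerUnavailable:
            self.store.append(event)
            return "stored"

    def replay(self):
        # Re-send stored events in arrival order; stop at the first failure.
        replayed = 0
        while self.store:
            try:
                self.send(self.store[0])
            except BrokerUnavailable:
                break
            self.store.pop(0)
            replayed += 1
        return replayed


# Simulated platform whose availability we can toggle.
state = {"up": False}
delivered = []


def send(event):
    if not state["up"]:
        raise BrokerUnavailable("platform is down")
    delivered.append(event)


publisher = FallbackPublisher(send, store=[])
first = publisher.publish({"id": 1})   # platform down, event is stored
state["up"] = True
replayed = publisher.replay()          # platform back, stored event is re-sent
second = publisher.publish({"id": 2})  # normal path
```

An event is removed from the store only after it has been sent successfully, so a failure in the middle of a replay does not lose events.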

The events coming in through external applications pass through an event gateway for authentication and authorization.
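As a rough illustration of what such a gateway checks, the sketch below separates authentication (is this a known producer with a valid credential?) from authorization (is this producer allowed to send this event type?). The producer table, the API-key scheme and the `authorize` function are assumptions made for the example; the source does not describe how the gateway is implemented.

```python
import hmac

# Hypothetical producer credentials and permissions.
PRODUCERS = {
    "partner-app": {"api_key": "s3cret", "allowed_events": {"order_progress"}},
}


def authorize(producer_id, api_key, event_type):
    producer = PRODUCERS.get(producer_id)
    # Authentication: unknown producer or wrong key. compare_digest avoids timing leaks.
    if producer is None or not hmac.compare_digest(producer["api_key"], api_key):
        return "unauthenticated"
    # Authorization: the producer is known but may not send this event type.
    if event_type not in producer["allowed_events"]:
        return "forbidden"
    return "accepted"


accepted = authorize("partner-app", "s3cret", "order_progress")
bad_key = authorize("partner-app", "wrong", "order_progress")
not_allowed = authorize("partner-app", "s3cret", "marketing_offer")
```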

All failed events are moved to a dead letter queue Kafka topic. A dead letter queue is a mechanism within a messaging system or a streaming platform for handling events that could not be processed successfully. The events in this topic are analyzed by the admins and are either retried or discarded.
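The dead letter queue pattern can be sketched in a few lines. Here a plain list stands in for the dead letter Kafka topic, and the `consume` and `handler` functions and the event fields are hypothetical; the point is only that a failing event is set aside together with its error instead of blocking the rest of the stream.

```python
def consume(events, handler, dead_letter_queue):
    """Process events in order; route failures to the dead letter queue."""
    processed = []
    for event in events:
        try:
            processed.append(handler(event))
        except Exception as exc:
            # Keep the original event and the failure reason for later analysis.
            dead_letter_queue.append({"event": event, "error": str(exc)})
    return processed


def handler(event):
    # Hypothetical business logic: an order event must carry an order_id.
    if "order_id" not in event:
        raise ValueError("missing order_id")
    return event["order_id"]


dlq = []
processed = consume([{"order_id": "A1"}, {"status": "READY"}], handler, dlq)
```

Storing the error next to the event gives whoever inspects the queue enough context to decide between retrying and discarding.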

A serverless cluster autoscaler function is triggered when the message brokers' CPU consumption passes a specified threshold. The function adds more nodes to the cluster, and another workflow function is then triggered to partition events across Kafka topics. The topics are partitioned across shards based on the domain since the events flow in through several different producers.
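The scaling decision itself is simple threshold logic. The sketch below shows one way it might look; the threshold, step size and broker cap are invented values, and `desired_broker_count` is a hypothetical function, not the actual autoscaler.

```python
def desired_broker_count(cpu_percent, current_brokers,
                         threshold=70.0, step=1, max_brokers=12):
    """Return the broker count the cluster should have after this check.

    All defaults are illustrative assumptions, not values from the source.
    """
    if cpu_percent > threshold:
        # Scale out by one step, but never beyond the configured cap.
        return min(current_brokers + step, max_brokers)
    return current_brokers


below = desired_broker_count(cpu_percent=55.0, current_brokers=3)
above = desired_broker_count(cpu_percent=85.0, current_brokers=3)
capped = desired_broker_count(cpu_percent=95.0, current_brokers=12)
```

In a real deployment the function would read the CPU metric from monitoring, request the new broker count from the managed Kafka service, and then kick off the follow-up workflow described above.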

If you wish to understand concepts like event streaming, designing real-time stream validation, serverless workflows, how Kafka topics are partitioned across brokers, Kafka performance, how distributed applications are deployed across different cloud regions and availability zones, designing distributed systems like Netflix, YouTube, ESPN and the like from the bare bones, and more, check out my Zero to Software Architect learning track.

The diagram below illustrates the flow of events through their unified event streaming platform.

Information source: Behind the scenes McDonald’s event-driven architecture

Shivang
