In this system design series, I discuss the intricacies of designing scalable distributed systems and the related concepts. This will help you immensely with your software architecture and system design interview rounds, in addition to helping you become a better software engineer.

If you haven’t read the first post of this series (CDN and load balancers), I recommend reading it first. If you wish to master the art of designing large-scale distributed systems starting from the bare bones, check out the Zero to Software Architect learning track that I’ve authored.

With that said, let’s get on with it.

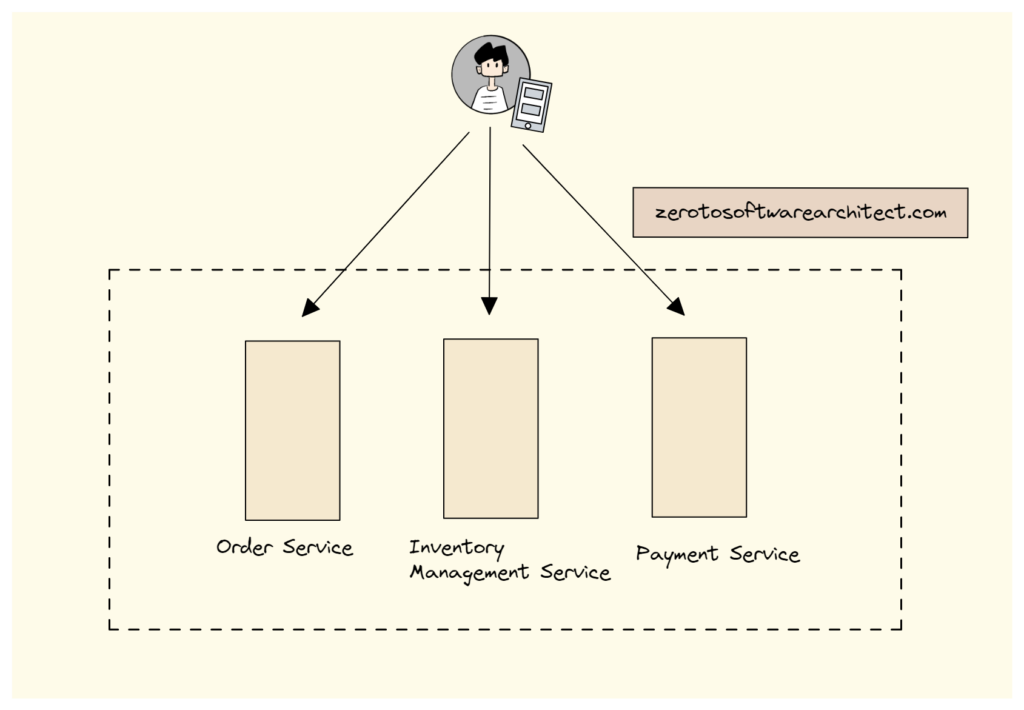

Picture a scenario where we have several microservices running on the backend, and the client, via the load balancer, calls each service directly at its specific endpoint. This is direct client-to-microservice communication.

Now, if we need to evolve our microservices or change their APIs, we have to update the client as well. This means the client is tightly coupled with the microservices. Also, what if a few microservices communicate over protocols other than HTTP, such as AMQP or binary protocols, and use different message formats? How will the client interact with those services?

To tackle these scenarios, we can leverage an API gateway. Besides addressing these specific problems, API gateways offer several other benefits. Let’s look at them.

What is an API gateway?

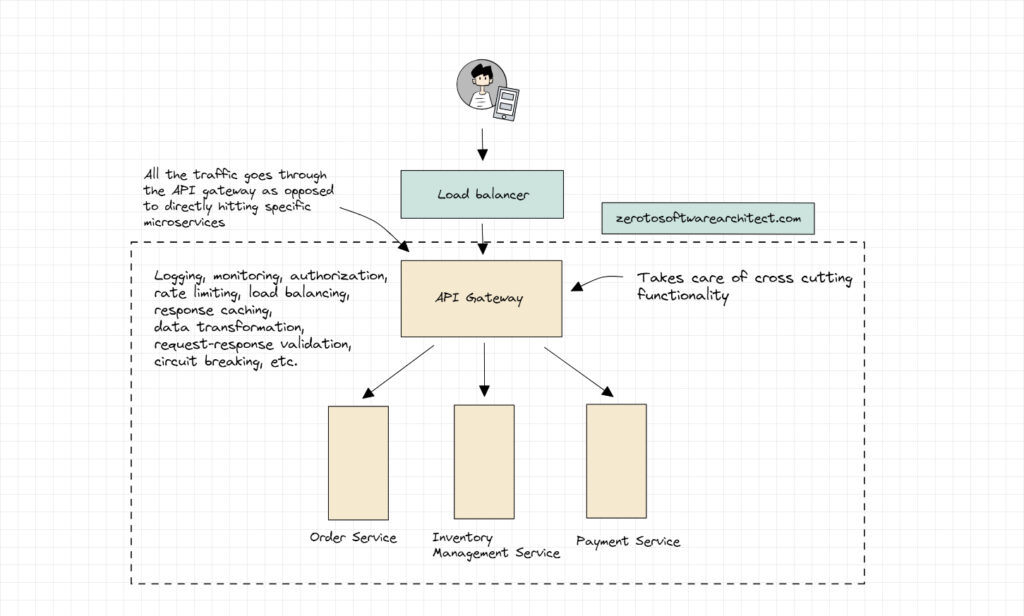

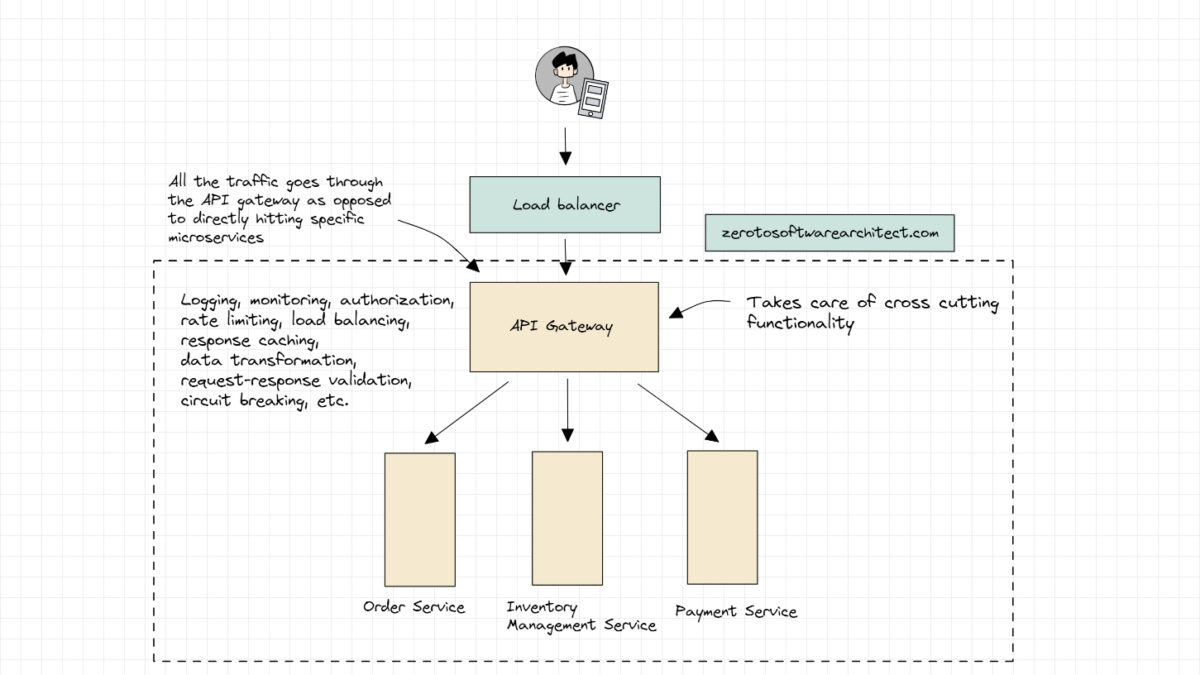

As the name implies, it is a component that acts as a gateway for all the traffic hitting the backend (comprising several microservices), providing secure access to our services via a REST API.

An API gateway abstracts the implementation of the services, providing a consistent API. The end user or client doesn’t know which service or machine their request will hit. This enables us to change the service implementation on the backend without impacting the client. This is similar to a static anycast IP address, which stays the same for all the machines behind it. I’ve discussed it in my previous system design post.

We can also have multiple API gateways in our system architecture, but more on that later.

Besides routing traffic, an API gateway also provides cross-cutting functionality to manage that traffic, such as logging, monitoring, authorization, enforcement of other security rules, rate limiting, load balancing, response caching, data transformation (XML to JSON and back), request-response validation, circuit breaking, business logic deployment, and more. The APIs of the backend services are configured with the API gateway.

With the API gateway in place, API clients such as apps, browsers, and third-party API consumers have no information about the backend services, which may be deployed on serverless compute, containers, or virtual machines. All the clients have is the API gateway URL and the request parameters specific to the response they need. The gateway routes each request to the right service based on the data passed in the request.

With this, a serverless deployment can be migrated to a virtual machine deployment without affecting the client.
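To make the routing idea concrete, here is a minimal Python sketch of a gateway route table. It assumes routing purely by path prefix (real gateways also match on methods, hosts, and headers), and the `Gateway` class and backend hostnames are hypothetical. Swapping the backend registered for `/orders` from a serverless endpoint to a VM address changes nothing for the client, which keeps calling the same path.

```python
from dataclasses import dataclass, field


@dataclass
class Gateway:
    """Toy API gateway (hypothetical): maps public path prefixes to backend base URLs."""
    routes: dict[str, str] = field(default_factory=dict)

    def register(self, prefix: str, backend_url: str) -> None:
        # Re-registering a prefix moves traffic to a new backend;
        # the public path the client calls stays the same.
        self.routes[prefix] = backend_url

    def resolve(self, path: str) -> str:
        # Longest-prefix match, so "/orders/history" can override "/orders".
        matches = [p for p in self.routes
                   if path == p or path.startswith(p.rstrip("/") + "/")]
        if not matches:
            raise LookupError(f"no route for {path}")
        prefix = max(matches, key=len)
        # Forward the full path to the chosen backend.
        return self.routes[prefix].rstrip("/") + path


if __name__ == "__main__":
    gw = Gateway()
    gw.register("/orders", "https://orders-fn.example.internal")  # serverless deployment
    print(gw.resolve("/orders/42"))
    gw.register("/orders", "http://10.0.0.12:8080")  # migrated to a VM
    print(gw.resolve("/orders/42"))  # same client path, new backend
```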

Let’s understand the features of an API gateway in a bit more detail.

API gateway features

Request aggregation

If a single page of an application needs to interact with multiple microservices to load its various components, then instead of having the client send a separate request to each microservice, we can have the API gateway receive a single page-load request from the client.

This approach is common when dealing with micro-frontends. I’ve explored it in depth in my Web Architecture 101 course. Check it out here.

The API gateway, in turn, sends requests to the respective microservices, aggregates the data, and returns it to the client in a single request-response cycle. This cuts down bandwidth consumption and latency.
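Here is a minimal Python sketch of request aggregation, assuming three hypothetical backend calls (`fetch_profile`, `fetch_orders`, `fetch_recommendations`) that stand in for real HTTP or gRPC requests. The gateway fans out to them concurrently with `asyncio.gather` and merges the results into one response, so the total wait is roughly that of the slowest call rather than the sum of all three.

```python
import asyncio


# Hypothetical backend calls; a real gateway would make HTTP/gRPC requests here.
async def fetch_profile(user_id: str) -> dict:
    await asyncio.sleep(0.01)  # simulate network latency
    return {"id": user_id, "name": "Ada"}


async def fetch_orders(user_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"order_id": 1}, {"order_id": 2}]


async def fetch_recommendations(user_id: str) -> list:
    await asyncio.sleep(0.01)
    return ["book-7", "book-9"]


async def load_home_page(user_id: str) -> dict:
    """Handle one page-load request by fanning out concurrently and merging the results."""
    profile, orders, recs = await asyncio.gather(
        fetch_profile(user_id),
        fetch_orders(user_id),
        fetch_recommendations(user_id),
    )
    return {"profile": profile, "orders": orders, "recommendations": recs}


if __name__ == "__main__":
    print(asyncio.run(load_home_page("u-1")))
```

In production, you would likely also decide how to handle a partial failure (for example, passing `return_exceptions=True` to `asyncio.gather` and returning the page without recommendations) rather than failing the whole page.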

Saving complexity and development time by applying cross-cutting concerns at a centralized place

If it weren’t for the functionality provided by API gateways, such as logging, authorization, response caching, and security, we would have to implement it in every microservice, which would complicate the system and increase development time.

With an API gateway, we can apply all these functionalities to the traffic coming into the system at a central location.
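One common way to apply cross-cutting concerns centrally is a middleware chain that wraps every backend handler. The Python sketch below assumes a simplified request dict and a hardcoded token set (`VALID_TOKENS` is hypothetical; a real gateway would verify JWTs or call an auth service). The backend handler, `orders_service`, contains only business logic, while logging and authorization live in the gateway.

```python
import logging
from typing import Callable

Request = dict               # simplified request: {"path": ..., "headers": {...}}
Response = tuple[int, str]   # (status code, body)
Handler = Callable[[Request], Response]

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("gateway")

VALID_TOKENS = {"secret-token"}  # hypothetical credential store


def with_logging(next_handler: Handler) -> Handler:
    def handler(req: Request) -> Response:
        status, body = next_handler(req)
        log.info("%s -> %d", req["path"], status)  # log every request centrally
        return status, body
    return handler


def with_auth(next_handler: Handler) -> Handler:
    def handler(req: Request) -> Response:
        auth = req.get("headers", {}).get("Authorization", "")
        if auth.removeprefix("Bearer ") not in VALID_TOKENS:
            return 401, "unauthorized"  # rejected before reaching the backend
        return next_handler(req)
    return handler


def orders_service(req: Request) -> Response:
    # Backend service: business logic only, no auth or logging code.
    return 200, "orders for " + req["path"]


def build_pipeline(handler: Handler, *middlewares: Callable[[Handler], Handler]) -> Handler:
    # Wrap so the first middleware listed is the outermost (runs first).
    for mw in reversed(middlewares):
        handler = mw(handler)
    return handler


gateway = build_pipeline(orders_service, with_logging, with_auth)
```

Adding another concern, such as rate limiting or caching, would mean writing one more wrapper and adding it to `build_pipeline`, without touching any microservice.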

Request transformation

An API gateway makes it possible for a client sending an HTTP request to interact with a backend service that uses a protocol the client does not support, such as AMQP.

An API gateway can translate a RESTful JSON-over-HTTP request into an AMQP or gRPC request according to the configured rules. This improves the system’s flexibility.
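Here is a minimal Python sketch of the translation step, assuming rules that map an HTTP method and path to an AMQP exchange and routing key. `AmqpMessage` and the `RULES` entries are hypothetical stand-ins for what a gateway would hand to an actual AMQP client library, which would then publish the message. The sketch covers only the mapping, not the network call.

```python
import json
from dataclasses import dataclass


@dataclass
class AmqpMessage:
    """Hypothetical stand-in for a message handed to an AMQP client for publishing."""
    exchange: str
    routing_key: str
    body: bytes
    content_type: str = "application/json"


# Hypothetical translation rules: (HTTP method, path) -> (exchange, routing key)
RULES = {
    ("POST", "/orders"): ("orders", "order.created"),
    ("POST", "/payments"): ("payments", "payment.requested"),
}


def http_to_amqp(method: str, path: str, json_body: str) -> AmqpMessage:
    try:
        exchange, routing_key = RULES[(method.upper(), path)]
    except KeyError:
        raise ValueError(f"no translation rule for {method} {path}") from None
    payload = json.loads(json_body)  # validate the incoming JSON before forwarding
    return AmqpMessage(exchange, routing_key, json.dumps(payload).encode("utf-8"))


if __name__ == "__main__":
    print(http_to_amqp("POST", "/orders", '{"sku": "A1", "qty": 2}'))
```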

API gateway deployment

API gateways typically have built-in load-balancing capability. So, for minimal system architectures running in a single cloud region, we can have the API gateway interact directly with the CDN instead of routing the traffic through a load balancer.

However, if the API gateway is deployed across cloud regions for availability and reduced latency, we need a separate load balancer to distribute traffic to the API gateways across the globe. A global load balancer works well in this scenario.
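The two tiers can be sketched in Python as follows. This is a simplification under stated assumptions: the global load balancer picks the healthy region with the lowest measured latency (real global load balancers often rely on anycast or DNS-based geo-routing), and each regional gateway spreads requests across its instances with plain round robin. `RegionalGateway`, `pick_gateway`, the region names, and the IPs are hypothetical.

```python
import itertools


class RegionalGateway:
    """Hypothetical API gateway in one region with built-in round-robin balancing."""

    def __init__(self, region: str, instances: list[str], healthy: bool = True):
        self.region = region
        self.healthy = healthy
        self._cycle = itertools.cycle(instances)

    def pick_instance(self) -> str:
        # Gateway-level load balancing: rotate through the service instances.
        return next(self._cycle)


def pick_gateway(gateways: list[RegionalGateway],
                 latency_ms: dict[str, float]) -> RegionalGateway:
    # Global load balancer: choose the healthy region with the lowest latency.
    candidates = [g for g in gateways if g.healthy]
    if not candidates:
        raise RuntimeError("no healthy region")
    return min(candidates, key=lambda g: latency_ms.get(g.region, float("inf")))


if __name__ == "__main__":
    us = RegionalGateway("us-east", ["10.0.1.1", "10.0.1.2"])
    eu = RegionalGateway("eu-west", ["10.1.1.1"])
    gw = pick_gateway([us, eu], {"us-east": 120.0, "eu-west": 25.0})
    print(gw.region, gw.pick_instance())
```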

I’ve discussed load balancing in my previous post here. If you haven’t read it, it’s a highly recommended read. If you wish to take a deep dive into the infrastructure on which distributed applications run and significantly improve your systems knowledge, check out my cloud computing course here, part of my Zero to Software Architect learning track.

Now, since an API gateway can typically perform load balancing as well, can we use it as an alternative to a load balancer?

Is an API gateway an alternative to a load balancer?

Though an API gateway can perform load balancing, load balancers and API gateways cannot be used interchangeably. Each component has dedicated functionality, and they are often used in conjunction to achieve the desired application behavior. An API gateway acts more like a proxy server than a load balancer.

API gateways operate at layer 7 (the application layer) of the OSI model. In contrast, load balancers, depending on the use case, can operate at layer 4 (the transport layer) as well as layer 7.
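The difference in what each layer can see is easier to grasp with a sketch. Below, a layer 4 balancer only has the connection tuple (IPs, ports, protocol), so it can do little more than hash it to a backend, whereas a layer 7 gateway parses the HTTP request and routes on its path and headers. The backend IPs, service names, and the `X-Beta` header are hypothetical, and hashing the tuple is just one common L4 strategy.

```python
import hashlib

BACKENDS = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]  # hypothetical instances


def l4_pick(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
            proto: str = "tcp") -> str:
    # Layer 4: only the connection tuple is visible, not the HTTP path or headers.
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}/{proto}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return BACKENDS[digest % len(BACKENDS)]  # same connection -> same backend


# Layer 7: routes are defined on request content.
L7_ROUTES = {"/users": "user-service", "/orders": "order-service"}


def l7_pick(path: str, headers: dict[str, str]) -> str:
    for prefix, service in L7_ROUTES.items():
        if path == prefix or path.startswith(prefix + "/"):
            # Header-based routing, e.g. sending opted-in clients to a beta build.
            if headers.get("X-Beta") == "1":  # hypothetical header name
                return service + "-beta"
            return service
    raise LookupError(f"no route for {path}")
```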

In addition, load balancers are designed to handle significantly more traffic than API gateways, since they sit higher up the hierarchy, closer to the client.

API gateways offered by various cloud providers have their own intricacies. In most cases, you’ll find a limit on the number of requests they can handle within a given time period, unlike load balancers.
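Request limits like these, and the rate limiting feature gateways expose to us, are commonly enforced with a token bucket. The Python sketch below is one such algorithm, not any particular provider’s implementation: a bucket allows bursts up to `capacity` requests and refills at `rate` tokens per second. The injectable `clock` parameter is an assumption added to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically respond with HTTP 429 Too Many Requests
```

A gateway would usually keep one bucket per client key (API key, user, or IP) so that one noisy client cannot exhaust the limit for everyone else.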

Again, above all, it depends on the use case. If the traffic is limited and the app is deployed in a single cloud region, we may go without a dedicated load balancer, as I discussed above.

Right! And what about service meshes? Doesn’t a service mesh offer the same functionality, applying cross-cutting concerns to microservices? What’s the difference between an API gateway and a service mesh? Can they be used interchangeably?

If you are not aware of what a service mesh is, I’ve discussed it in my distributed systems design course, along with the intricacies of inter-microservice communication and more. Check it out.

What is the difference between an API gateway and a service mesh?

A service mesh may overlap with an API gateway in functionality, but they are not alternatives to each other and cannot be used interchangeably. They can be used in conjunction to achieve the desired system behavior, just like API gateways and load balancers.

An API gateway is a public-facing component, whereas a service mesh handles the internal communication between microservices, applying cross-cutting functionality to it. Each component has its dedicated responsibilities.

API gateways are immensely useful, but they also introduce a single point of failure into the architecture. If the gateway goes down, the entire backend could take a hit. I discuss how we can leverage the Backends for Frontends pattern to prevent the API gateway from becoming a system bottleneck in my next blog post.

If you found the content helpful, check out the Zero to Software Architect learning track, a series of three courses I have written to teach you, step by step, software architecture and distributed system design. The learning track takes you from zero knowledge to being a pro at designing large-scale distributed systems like YouTube, Netflix, Hotstar, and more.

Consider sharing this post with your network for better reach. You can also subscribe to my newsletter to get the content I publish in your inbox. I am Shivang; you can read more about me here. I’ll see you in the next blog post. Until then, cheers.

Shivang
