Monzo Architecture – An Insight Into the Backend Infrastructure of a Modern Digital Bank

Monzo is a digital, mobile-only bank based in the United Kingdom and one of the earliest app-based digital banks in the UK. It set a record for the quickest crowdfunding campaign in history, raising 1 million pounds in 96 seconds via the Crowdcube investment platform. This year, it announced plans to expand into the United States.

This write-up offers an insight into Monzo's backend infrastructure: we'll look at the tech stack they leverage to scale their service to millions of customers online.

So, without further ado, let's get on with it.


Introduction

A fintech service has a few key requirements: it has to be available 24/7, consistent, extensible, and performant enough to handle concurrent transactions; it has to execute daily batch processes; and it has to be fault-tolerant, with no single point of failure.

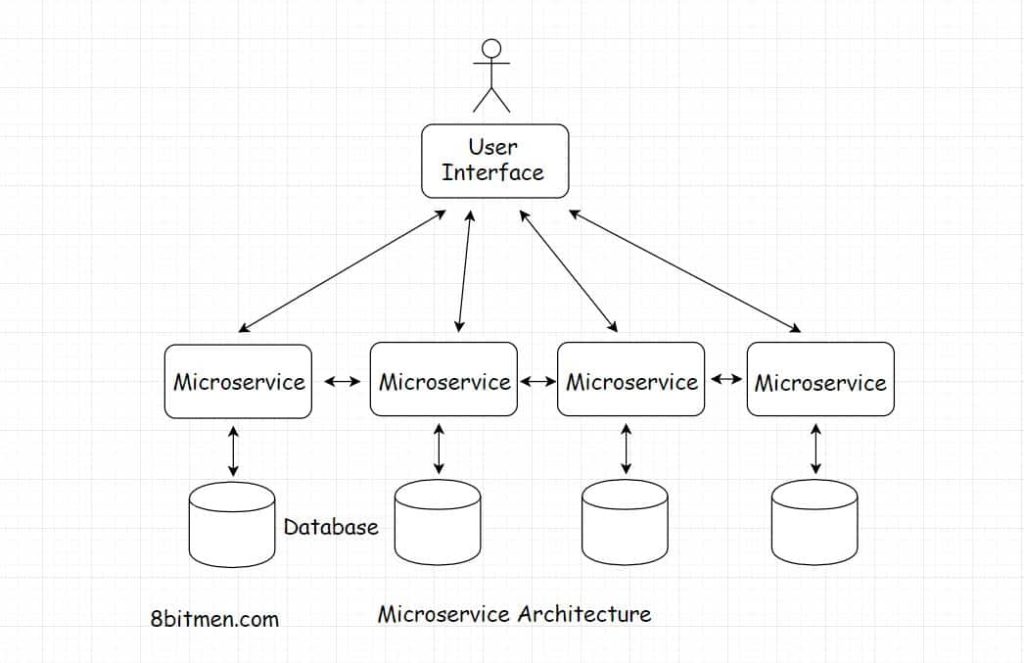

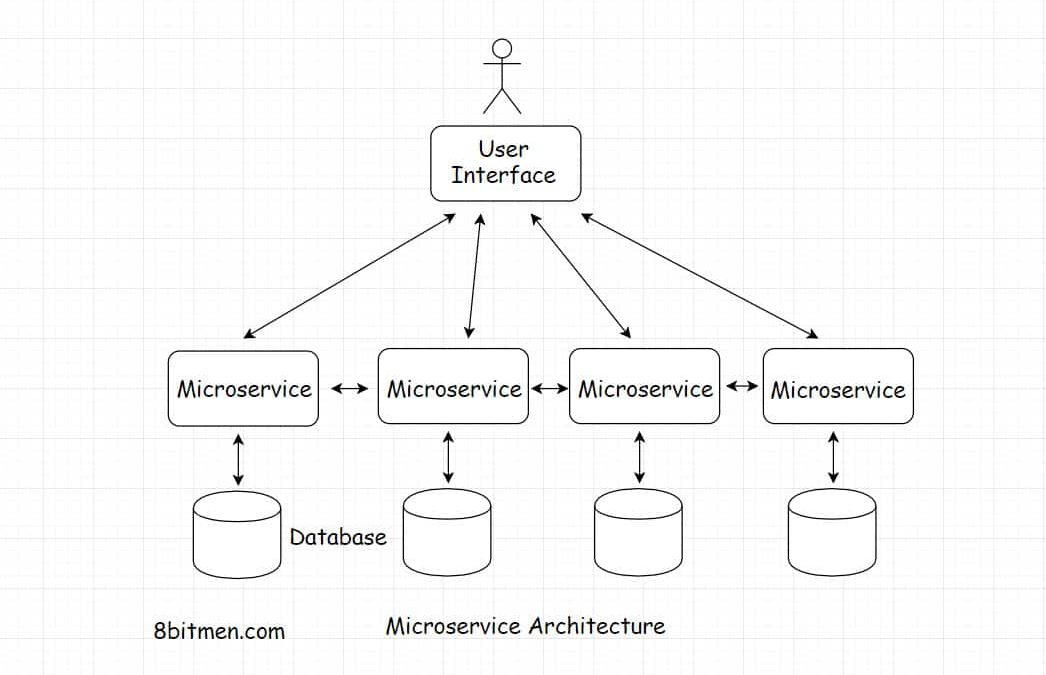

With these and other use-case requirements in mind, the developers at Monzo chose a microservice architecture over a monolithic one right from the outset.

Microservices enable businesses to scale, stay loosely coupled, move fast, and stay highly available. Besides this, with microservices, teams can take ownership of individual services and roll out new features faster without stepping on each other's toes. The dev team at Monzo also learned from the experience of large-scale internet services like Twitter, Netflix, and Facebook that a monolith is hard to scale.


Since the business wanted to operate in multiple segments of the market, a distributed architecture was the best bet. The beta version was launched with about 100 services.

Key Areas of Focus in System Development & Production Deployment

To ensure a smooth service, there were four primary areas to focus on: cluster management, polyglot services, RPC transport, and asynchronous messaging.

Cluster management

A large number of servers had to be managed with efficient work distribution and contingencies for machine failure. The system had to be fault-tolerant and elastic. Multiple services could run on a single host to make the most out of the infrastructure.

The traditional approach of manually partitioning services across machines was tedious and didn't scale. Instead, they relied on a cluster scheduler to distribute tasks efficiently across the cluster based on resource availability and other factors.
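To make resource-based placement concrete, here is a minimal sketch of a scheduler that filters out nodes without enough free CPU and memory, then places each task on the feasible node with the most free CPU. This "least-loaded" heuristic, the `Node`/`Task` shapes, and the units are assumptions for illustration; it is not the actual algorithm of Mesos/Marathon or Kubernetes, whose schedulers weigh many more factors.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu: float  # CPU cores still available (assumed unit)
    free_mem: int    # memory still available, in MiB (assumed unit)
    tasks: list = field(default_factory=list)


@dataclass
class Task:
    name: str
    cpu: float
    mem: int


def schedule(tasks, nodes):
    """Place each task on the feasible node with the most free CPU.

    Returns a dict mapping task name -> node name, or None if no node fits.
    """
    placement = {}
    for task in tasks:
        # Filter: only nodes with enough CPU and memory left can run the task.
        feasible = [n for n in nodes if n.free_cpu >= task.cpu and n.free_mem >= task.mem]
        if not feasible:
            placement[task.name] = None  # unschedulable; a real scheduler would keep it pending
            continue
        # Score: prefer the least-loaded node to spread work across the cluster.
        best = max(feasible, key=lambda n: n.free_cpu)
        best.free_cpu -= task.cpu
        best.free_mem -= task.mem
        best.tasks.append(task.name)
        placement[task.name] = best.name
    return placement
```

With two nodes offering 4 and 3 cores, a 2-core task lands on the larger node, the next small tasks spill onto the other one, and a task bigger than any node stays unscheduled instead of overloading a host.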

After running Mesos and Marathon for a year, they switched to Kubernetes with Docker containers. The entire cluster ran on AWS. The switch to Kubernetes cut their deployment costs by 65 to 70%. Before Kubernetes, they ran Jenkins hosts that were inefficient and expensive.

Polyglot Services

The team used Go to write their low-latency, highly concurrent services. Having a microservice architecture also enabled them to leverage other technologies.

For sharing data across services, they used etcd, an open-source distributed key-value store written in Go that lets microservices share data in a distributed environment. Internally, etcd elects a leader among its members using the Raft consensus algorithm, so it tolerates machine failures and network partitions as long as a majority of its members can still communicate.
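A common way services use a store like etcd for coordination is lease-based leader election: an instance claims a leader key that expires unless the owner keeps refreshing its lease, so if the leader crashes, the key disappears and another instance can take over. The sketch below is a hypothetical, single-process, in-memory toy (`ToyKV`, `campaign`, and the `/election/leader` key are made-up names). It is not etcd's client API, and a real deployment relies on etcd's replicated, transactional compare-and-swap rather than a Python dict.

```python
import time


class ToyKV:
    """In-memory stand-in for a key-value store with TTL leases (hypothetical, not etcd's API)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Lease expired: the key vanishes, as if its owner had crashed.
            del self._data[key]
            return None
        return value

    def put_if_absent(self, key, value, ttl):
        """Create `key` with a TTL lease only if it does not exist yet.

        In this single-threaded toy the check and the write happen together,
        standing in for a store-side atomic compare-and-swap.
        """
        if self.get(key) is not None:
            return False
        self._data[key] = (value, self._clock() + ttl)
        return True

    def refresh(self, key, value, ttl):
        """Extend the lease, but only if `value` still owns the key."""
        if self.get(key) != value:
            return False
        self._data[key] = (value, self._clock() + ttl)
        return True


def campaign(kv, candidate, ttl=5.0):
    """Try to become leader; True if `candidate` now holds the leader key."""
    key = "/election/leader"
    return kv.put_if_absent(key, candidate, ttl) or kv.get(key) == candidate
```

The leader must call `refresh` more often than the TTL; if it stops (crash, partition), its lease lapses and the next `campaign` by another instance succeeds.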

RPC

Since the services were implemented with varied technologies, Monzo's developers wrote an RPC layer using Finagle, with Linkerd as a service mesh, to enable efficient communication between them.

The layer provides load balancing, automatic retries when a service fails, connection pooling (routing requests over pre-existing connections rather than creating new ones), and the ability to split and regulate the traffic sent to a service for testing.
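Two of those features, load balancing and automatic retries, can be illustrated with a minimal client-side sketch. It assumes backends are plain Python callables and uses simple round-robin selection; `RoundRobinClient` and `Unavailable` are hypothetical names, and production balancers such as Finagle's and Linkerd's are load-aware rather than plain round-robin.

```python
import itertools


class Unavailable(Exception):
    """Raised by a backend that cannot serve the request (hypothetical error type)."""


class RoundRobinClient:
    """Toy RPC client: round-robin load balancing plus bounded retries."""

    def __init__(self, backends, max_attempts=3):
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        self._backends = backends
        self._cycle = itertools.cycle(range(len(backends)))
        self.max_attempts = max_attempts

    def call(self, request):
        last_error = None
        for _ in range(self.max_attempts):
            # Each attempt goes to the next backend in rotation.
            backend = self._backends[next(self._cycle)]
            try:
                return backend(request)
            except Unavailable as exc:
                # Retry on another instance; only safe for idempotent requests.
                last_error = exc
        raise last_error
```

Retrying is only safe when a request is idempotent or deduplicated downstream: blindly retrying a non-idempotent payment call could charge a customer twice.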

Finagle has been used in production at Twitter for years and is battle-tested. Linkerd is a service mesh for Kubernetes.

Asynchronous Messaging

Asynchronous features are commonplace in modern web apps. In the Monzo app, push notifications, the payment processing pipeline, and loading the user's feed with transactions all happened asynchronously, powered by Kafka.

Kafka's distributed design enabled the team to scale the async messaging architecture on the fly, keep it highly available, and keep messaging data persistent to avoid data loss in case of a message queue failure. Because Kafka retains messages, developers could also go back in time and look at the events that occurred in the system from a given point in time onward.
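The "go back in time" property comes from Kafka's log-based design: messages are appended to partitions, each message gets a sequential offset, and consumers track their own read position instead of deleting what they consume. Kafka's consumer clients can seek to an earlier offset or look one up by timestamp. The toy below models a single partition in memory to show why replay is cheap; `Partition`, `Consumer`, and `offset_for_time` are hypothetical names, and replication, persistence, and retention are not modeled.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    offset: int
    timestamp: float
    value: str


class Partition:
    """Toy append-only log partition (not Kafka's API)."""

    def __init__(self):
        self._records = []

    def append(self, timestamp, value):
        # Offsets are assigned sequentially; records are never modified in place.
        record = Record(len(self._records), timestamp, value)
        self._records.append(record)
        return record.offset

    def read(self, offset, max_records=100):
        """Return records starting at `offset`; reading never deletes anything."""
        return self._records[offset:offset + max_records]

    def offset_for_time(self, timestamp):
        """Earliest offset with timestamp >= `timestamp` (assumes time-ordered appends)."""
        timestamps = [r.timestamp for r in self._records]  # O(n); fine for a toy
        return bisect.bisect_left(timestamps, timestamp)


class Consumer:
    """Each consumer keeps its own position, so many can read the same log independently."""

    def __init__(self, partition):
        self.partition = partition
        self.position = 0

    def poll(self):
        records = self.partition.read(self.position)
        self.position += len(records)
        return [r.value for r in records]

    def seek(self, offset):
        # "Going back in time" is just moving the read position backwards.
        self.position = offset
```

Because consumption only advances a cursor, a push-notification consumer and a feed consumer can read the same events at their own pace, and either can be rewound to reprocess history.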

If you want to learn software architecture from the right resources, master the art of designing large-scale distributed systems that scale to millions of users, and understand what tech companies are really looking for in candidates during system design interviews, read my blog post on mastering system design for your interviews or web startup.

Cassandra as a Transactional Database

Monzo uses Apache Cassandra as the transactional database for its 150+ microservices running on AWS. This got me thinking: for managing transactional data, two things are vital, ACID guarantees and strong consistency.

Apache Cassandra is an eventually consistent, wide-column NoSQL datastore with a distributed design, built for scale.
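Cassandra's consistency is tunable per query: with a replication factor RF, a read waits for R replicas and a write for W replicas, and in the simple model, when R + W > RF the two replica sets must overlap, so a read sees the latest acknowledged write. QUORUM means floor(RF / 2) + 1 replicas. The sketch below only does that arithmetic for a single data center; the function names are hypothetical, and overlapping quorums do not by themselves provide multi-row ACID transactions.

```python
def quorum(replication_factor):
    """Replicas the QUORUM consistency level waits for: floor(RF / 2) + 1."""
    return replication_factor // 2 + 1


def replicas_for(level, replication_factor):
    """Map a few consistency level names to replica counts (single data center)."""
    counts = {"ONE": 1, "QUORUM": quorum(replication_factor), "ALL": replication_factor}
    return counts[level]


def reads_see_latest_write(replication_factor, read_level, write_level):
    """True if every read replica set overlaps every write replica set (R + W > RF)."""
    r = replicas_for(read_level, replication_factor)
    w = replicas_for(write_level, replication_factor)
    return r + w > replication_factor
```

With RF = 3, QUORUM reads and writes (2 + 2 > 3) overlap, while ONE/ONE (1 + 1) does not, which is where the "eventually" in eventually consistent comes from.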

So how exactly does Apache Cassandra handle transactions?

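First, picking a technology largely depends on the use case. After searching around a bit, it appears we can pull off transactions with Cassandra, but with a lot of ifs and buts: Cassandra transactions are not like regular RDBMS ACID transactions. What Cassandra offers are lightweight transactions, conditional compare-and-set writes (for example, INSERT ... IF NOT EXISTS) that are scoped to a single partition, coordinated with Paxos, and noticeably slower than regular writes.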

A couple of Stack Overflow threads and a YugaByte DB article are a good read on it.

Well, folks! This is pretty much it. If you liked the write-up, share it with your network for better reach. I am Shivang.

Check out the Zero to Software Architecture Proficiency learning path, a series of three courses I have written to educate you, step by step, on software architecture and distributed system design. The learning path takes you from having no knowledge of the domain to being a pro at designing large-scale distributed systems like YouTube, Netflix, Hotstar, and more.

Shivang
