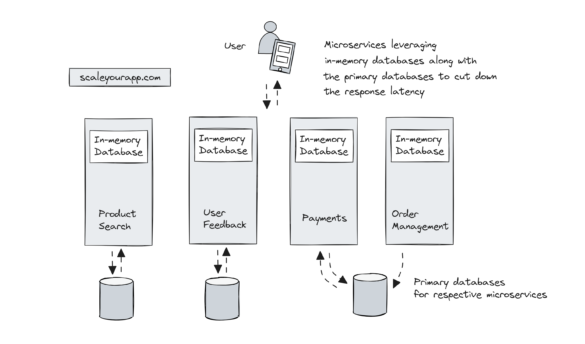

System Design Case Study #5: In-Memory Storage & In-Memory Databases – Storing Application Data In-Memory To Achieve Sub-Second Response Latency

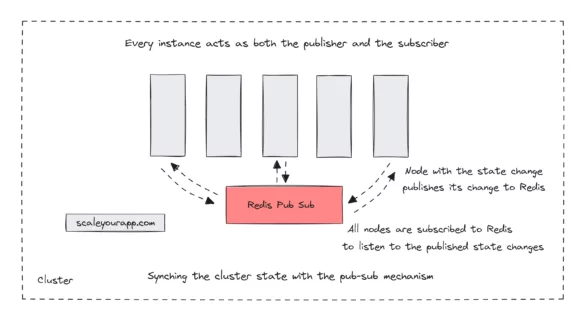

System Design Case Study #4: How WalkMe Engineering Scaled their Stateful Service Leveraging Pub-Sub Mechanism

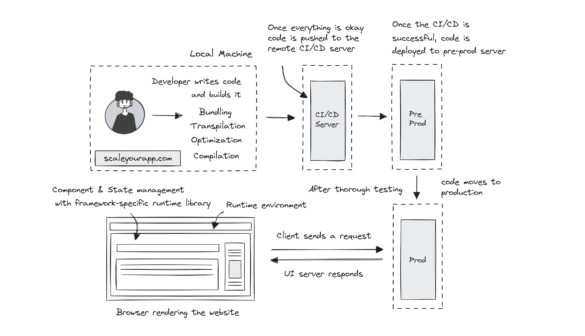

Why Stack Overflow Picked Svelte for their Overflow AI Feature And the Website UI

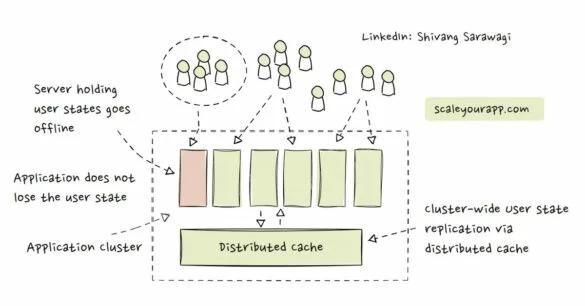

A Discussion on Stateless & Stateful Services (Managing User State on the Backend)

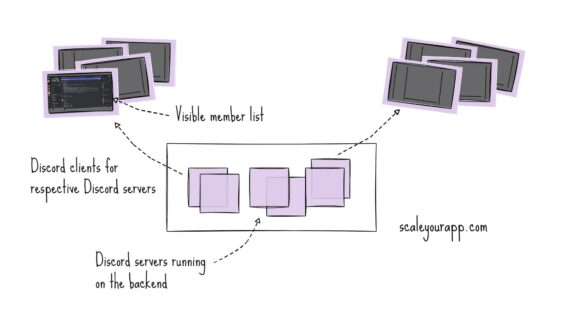

System Design Case Study #3: How Discord Scaled Their Member Update Feature Benchmarking Different Data Structures

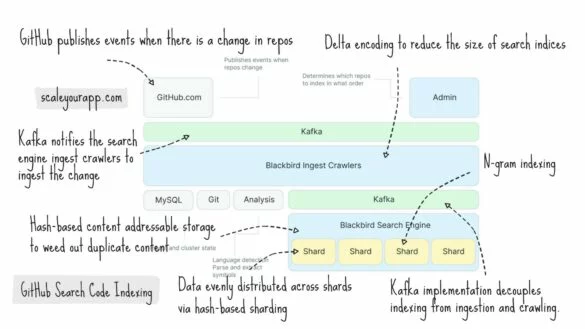

System Design Case Study #2: How GitHub Indexes Code For Blazing Fast Search & Retrieval

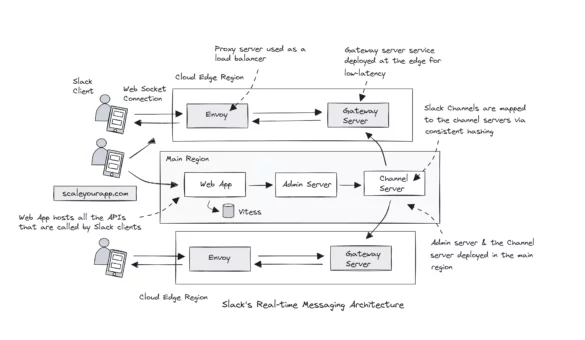

System Design Case Study #1: Exploring Slack’s Real-time Messaging Architecture

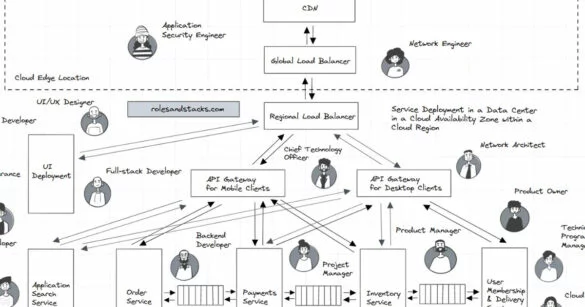

Web Service & Associated IT Roles

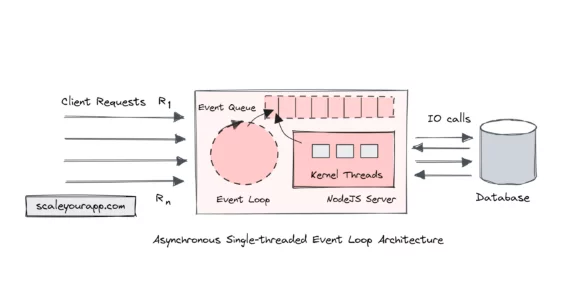

Single-threaded Event Loop Architecture for Building Asynchronous, Non-Blocking, Highly Concurrent Real-time Services

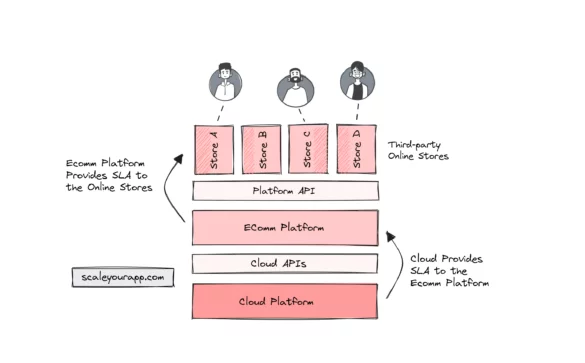

Understanding SLA (Service Level Agreement) In Cloud Services: How Is SLA Calculated In Large-Scale Services?

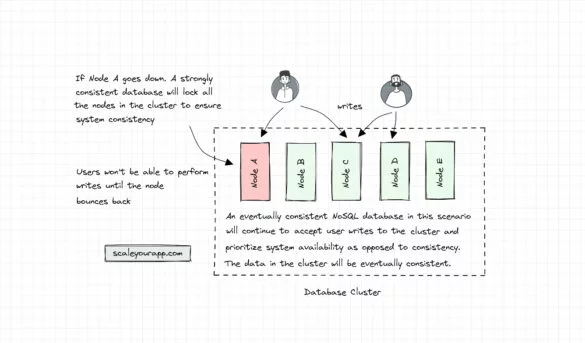

Database Architecture – Part 2 – NoSQL DB Architecture with ScyllaDB (Shard Per Core Design)

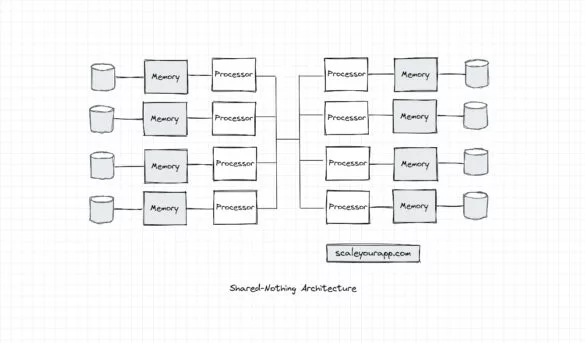

Parallel Processing: How Modern Cloud Servers Leverage Different System Architectures to Optimize Parallel Compute

Zero to Software Architecture Proficiency learning path - Starting from zero to designing web-scale distributed services. Check it out.

Master system design for your interviews. Check out this blog post written by me.

Zero to Software Architecture Proficiency is a learning path authored by me comprising a series of three courses for software developers, aspiring architects, product managers/owners, engineering managers, IT consultants and anyone looking to get a firm grasp on software architecture, application deployment infrastructure and distributed systems design starting right from zero. Check it out.

Recent Posts

- System Design Case Study #5: In-Memory Storage & In-Memory Databases – Storing Application Data In-Memory To Achieve Sub-Second Response Latency

- System Design Case Study #4: How WalkMe Engineering Scaled their Stateful Service Leveraging Pub-Sub Mechanism

- Why Stack Overflow Picked Svelte for their Overflow AI Feature And the Website UI

- A Discussion on Stateless & Stateful Services (Managing User State on the Backend)

- System Design Case Study #3: How Discord Scaled Their Member Update Feature Benchmarking Different Data Structures

CodeCrafters lets you build tools like Redis, Docker, Git and more from the bare bones. With their hands-on courses, you not only gain an in-depth understanding of distributed systems and advanced system design concepts but can also compare your project with the community and then finally navigate the official source code to see how it’s done.

Get 40% off with this link. (Affiliate)

Follow Me On Social Media